There must be a better way to automate integration testing

After years of trying to automate integration tests, I think a better way would be for a robot to test the product through the actual user interface: clicking/tapping as a user would, seeing/hearing/reading the output as a user would, and thinking as a user would.

In this post, I'll explain the diversity of issues I've faced trying to automate software testing, all of which would be much simpler to solve if a robot tested directly through the user interface. Many people think this kind of testing is so costly that it's better to rely on unit testing and manual testing alone. In my experience, fully automated integration tests are worth much more than many people assume. So if we made integration testing easier, I think we'd enjoy higher-quality software across the board.

1. Tap or click like a human

2. See, hear, read braille, etc. like a human

3. Think like a human...but not too much ;-)

Examples of Challenges of Test Automation

Below I'll share three experiences to illustrate the diversity of issues we face when trying to automate integration tests.

1. Mobile app

I work on a map application. While navigating to a destination, users should be able to swipe around to look at cafes and restaurants they might walk past. When they swipe away from the navigation line, the current location icon should change to indicate that the screen is no longer centered on the user's location. The automation for this test worked for a couple of years and then suddenly failed when the makers of the test infrastructure, Espresso, changed something that affected swiping. Below is a screenshot showing what used to work and what no longer does, and here is the full video.

To be clear, when an end user swipes, the app behaves exactly as it should; there is no bug. But when the test code swipes, it doesn't. Something about the way Espresso emulates a swipe probably differs enough from a real swipe that the application doesn't register it, so it doesn't change the current location icon from solid blue to hollow.

Again, if the test were performed by a robot interacting through the user interface, nothing would get short-circuited and the test would pass as it should. Thankfully, people are working on robots that test mobile apps by physically tapping the screen. Check out Tapster ("We make robots to test mobile devices.") from Jason Huggins, founder of Selenium, Appium, and Sauce Labs.

2. Web app

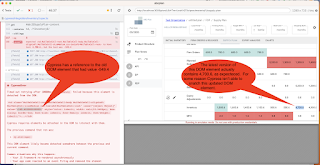

In this example, we'll look at how imperceptible timing differences can make a test appear to fail when, from an end-user perspective, nothing is wrong. The screenshot below shows a web application tested with the popular Cypress test framework. On the left, the test code reports a failure. However, it failed only because it read a stale value from the screen, -549.4, whereas the screen clearly shows the correct value, 4,700.6:

The root cause is the way Cypress works. With Cypress, you tell it where to look on the screen using a selector. When it finds an element matching that selector, it performs a validation. If the selector doesn't find what you told it to look for, it keeps trying until it times out. In this case, however, we're trying to detect a change in a particular cell in a table. When the user clicks a button, the cell value should change. In other words, the selector will find the cell on the screen right away, but the test needs to see a new value in that cell.
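To make the retry idea concrete, here is a minimal sketch in plain JavaScript (Node, no Cypress). It re-runs both the query and the assertion on the cell's value until the assertion passes or a timeout expires. Everything here is hypothetical. The names makeCell, clickButton, and retry are illustrative, and the 20 ms update delay is made up. The 4000 ms default only mirrors Cypress's default command timeout. This is a simplified model and not how Cypress is implemented. In Cypress itself, chaining an assertion with .should(), for example .should('have.text', '4,700.6'), asks Cypress to retry in a similar way.

```javascript
// Hypothetical stand-in for a table cell that initially shows a stale value.
function makeCell(initial) {
  return { text: initial };
}

// Hypothetical app behavior: the cell is re-rendered shortly after a click.
function clickButton(cell, newText, delayMs) {
  setTimeout(() => { cell.text = newText; }, delayMs);
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Re-run the query AND the assertion on its result until the assertion
// passes or the timeout expires (a loose model of Cypress retry-ability).
async function retry(query, assertion, { timeout = 4000, interval = 10 } = {}) {
  const deadline = Date.now() + timeout;
  for (;;) {
    const value = query();
    if (assertion(value)) return value;
    if (Date.now() >= deadline) {
      throw new Error(`Timed out; last value was ${value}`);
    }
    await sleep(interval);
  }
}

async function main() {
  const cell = makeCell("-549.4");
  clickButton(cell, "4,700.6", 20);

  // Reading once, right after the click, sees the stale value.
  const once = cell.text;

  // Retrying the assertion on the value waits until the new value appears.
  const retried = await retry(() => cell.text, (t) => t === "4,700.6");
  return { once, retried };
}

main().then(({ once, retried }) => {
  console.log(`single read: ${once}`);   // single read: -549.4
  console.log(`with retry: ${retried}`); // with retry: 4,700.6
});
```

Finding the element is not enough on its own. The check has to be on the value, so that the retry keeps going until the cell shows what the user would see.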

The Cypress error message above says to solve this as follows: "You typically need to re-query for the element or add 'guards' which delay Cypress from running new commands." For more information, here is a brief and informative video explaining this.

For perhaps a few milliseconds after the user clicks the button, the old value remains on the screen, but a human can't even perceive that. As I interpret it, the "guard" Cypress talks about would be something like a loading indicator. When the user clicks the button, the app shows a loading indicator. When the user interface contains the updated data, the app hides the loading indicator, and the Cypress test knows it's time to validate the cell values. However, user interface designers typically say, "You should use a progress indicator for any action that takes longer than one second." Flashing a loading indicator for an update that takes only milliseconds would hurt the user experience (UX).

So you know what testers typically do? They add a wait, for example cy.wait(1000); to wait 1 second. And Cypress experts are likely to shame, castigate, deride, and vilify developers who just throw in a wait. Even I have tried really hard to "do the right thing" in the eyes of Cypress experts. But most of the time, just throwing in the wait statement has served me better in both the short and long term.
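Here is a similar plain-JavaScript sketch, not Cypress, of the trade-off a fixed wait makes. The test passes whenever the update finishes within the wait and reads a stale value whenever it doesn't. Every run pays the full wait either way. The names readAfterFixedWait and clickButton are hypothetical. The millisecond values are also made up, scaled down from the 1-second wait so the example runs quickly.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical app behavior: the cell updates `delayMs` after the click.
function clickButton(cell, newText, delayMs) {
  setTimeout(() => { cell.text = newText; }, delayMs);
}

// The "just add a wait" approach: click, sleep a fixed time, then read once.
async function readAfterFixedWait(updateDelayMs, waitMs) {
  const cell = { text: "-549.4" };
  clickButton(cell, "4,700.6", updateDelayMs);
  await sleep(waitMs);
  return cell.text;
}

async function main() {
  // The update finishes well within the wait, so the read sees the new value.
  const fast = await readAfterFixedWait(10, 100);
  // The update takes longer than the wait (say, on a slow machine): stale read.
  const slow = await readAfterFixedWait(200, 100);
  return { fast, slow };
}

main().then(({ fast, slow }) => {
  console.log(`fast update: ${fast}`); // fast update: 4,700.6
  console.log(`slow update: ${slow}`); // slow update: -549.4
});
```

Choosing a generous wait makes the slow case rare, at the cost of making every run slower.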

Something seems wrong with the test system if it needs modifications made to the production code to make it easier to test. It reminds me of the time I called to make an appointment with a fortune teller; when she asked me my name, I hung up because shouldn't a fortune teller already know? Shouldn't a test system be able to figure things out on its own?

Again, if the test could see and think like a human, it would not get hung up on this. Humans don't perceive milliseconds; we notice things in seconds. A delay too short to perceive doesn't matter from an end-user standpoint. We've all seen what ChatGPT can do; it's high time some of that intelligence worked for us to test our software.

3. Cross-platform app

Integration testing can be especially difficult with Flutter. Flutter is a multiplatform framework, and as such it's an abstraction layer on top of many others, including Android, iOS, web, macOS, and more. In their rush to get products to market, platform makers seem to treat their testing story as an afterthought. Flutter is no different. In fact, it's worse, because its testing story is an afterthought on top of an afterthought!

I'll spare you the details and jump to the punchline: Flutter's view is a "black hole" to the Android test framework, Espresso.

Sure, Flutter has its own test framework, but as I said in an earlier post, I could not get it to work in a reasonable amount of time.

Again, if a robot held a device in its robotic hand, it could tap to download the application, install it, create an account, confirm its identity via two-factor authentication (2FA), and even send messages to other robots on Android, iOS, web, and tomorrow's platforms. A swarm of these robots could simulate whole workflows.

There must be a better way...

Tapster ("We make robots to test mobile devices.") is on to something, and I'm looking forward to trying it out. Ultimately, though, most of the issues I've experienced implementing and maintaining test automation would be resolved if testers could ask a robot for help.

Perhaps that's easier said than done, but in a world with robots that can do backflips, why can't a robot just tap and click for us? In a world where we rely on face recognition to secure our money in our banking apps, why can't we train image recognition to verify what's shown on a user interface? In a world with AI that can "think" well enough to convince a Google employee that it should not be turned off, why can't we use AI to figure out how long to wait before validating a screen?

In conclusion, I think that if testing were a profession that sprang up today instead of 40-50 years ago, a fresh look at what's possible with today's technology would result in test automation that's as simple as 1, 2, 3:

1. Tap or click like a human

2. See, hear, read braille, etc. like a human

3. Think like a human...but not too much ;-)